Family Face Recognition Tracking System in Python (Work in Progress)

Posted on Nov 14, 2024 @ 02:14 AM under Machine Learning Computer Vision

The Family Face Recognition Tracking System is a Python-based application designed to detect and track faces in real-time using a webcam. This application leverages a foundational face recognition model trained on images of my family members. It identifies known individuals and saves any unknown faces to the filesystem for later review. The system utilizes the face_recognition library for facial recognition, OpenCV for video processing, and various other libraries for performance and evaluation.

Table of Contents:

- Project Overview

- Setting Up the Environment

- Training the Model

- Validating the Model

- Running the Real-Time Application

- Conclusion

Project Overview

This face recognition application involves multiple key stages: training, validating, and real-time face recognition.

Here’s an overview of the key features:

- Training: I used a set of labeled images to train the model. Each image contains a person's face, and the model learns how to encode those faces as numeric vectors (feature representations). In the training folder, I created sub-folders with the name of each person in my household. In each of these sub-folders, I saved a number of images with different poses. The purpose of this is to try and get a variety of images with the same person. The output of the training will be a saved dictionary of names and encodings. The encodings are basically the numerical representations of the unique features of a face. This will be used to compare faces.

- Validation: After training the model, I validated its accuracy using a separate set of images to check that the model can correctly identify faces. The folder structure is a bit different from the training set: I created a single validation folder containing a mixture of images of the different individuals in my household.

- Real-time Recognition: Once the model is trained and validated, the application uses a webcam feed to recognize faces in real time. If an unknown face is detected, it is stored on the filesystem for later processing. All the code is kept in a main.py file.

The basic structure of the project is shown below:

├── encodings
│   └── encodings.pkl
├── main.py
├── README.md
├── requirements.txt
├── training
│   ├── sarah
│   │   ├── 20210227_121111_mfnr.jpg
│   │   ├── 20211225_155854.jpg
│   │   ├── 20220528_134618_001.jpg
│   │   ├── 20220611_125915.jpg
│   │   ├── IMG_20180920_171340.jpg
│   │   └── VID_20180904_105409.jpg
│   ├── mary
│   │   ├── 20210716_193927.jpg
│   │   ├── 20220530_124746.jpg
│   │   ├── IMG_20180827_184109.jpg
│   │   ├── IMG_20180908_115925.jpg
│   │   ├── IMG_20181002_171506.jpg
│   │   ├── IMG_20181011_185655.jpg
│   │   ├── IMG_20181021_180342.jpg
│   │   ├── IMG_20190125_070431.jpg
│   │   └── IMG_20190125_071537.jpg
│   ├── john
│   │   ├── 1234.jpg
│   │   ├── 20220604_125508.jpg
│   │   ├── 20220619_110337.jpg
│   │   ├── 20220825_162536.jpg
│   │   ├── 20221227_195318.jpg
│   │   ├── 20230515_105538.jpg
│   │   └── myimg.jpg
│   └── janet
│       ├── 20201002_202029.jpg
│       ├── 20220326_120424.jpg
│       ├── 20220423_191130.jpg
│       ├── 20220604_140517.jpg
│       ├── 20220729_102104.jpg
│       └── 20230514_202906.jpg
├── unknown_frames
│   └── unknown.jpg
└── validation
    ├── mary_1.jpg
    ├── sarah_1.jpg
    ├── john_1.jpg
    ├── john_2.jpg
    ├── john_3.jpg
    └── janet_1.jpg
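With this layout, the name of each sub-folder under training doubles as the label for every image inside it. As a rough sketch of that idea (the helper name images_by_person and the IMAGE_SUFFIXES set are my own assumptions and are not part of main.py), the training folder could be listed per person like this:

```python
from collections import defaultdict
from pathlib import Path

# Assumed set of image file types; adjust to whatever your photos use.
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def images_by_person(training_root):
    """Map each sub-folder name (the person's name) to its image paths."""
    grouped = defaultdict(list)
    for person_dir in sorted(Path(training_root).iterdir()):
        if not person_dir.is_dir():
            continue  # ignore stray files directly under the training folder
        for image_path in sorted(person_dir.iterdir()):
            if image_path.suffix.lower() in IMAGE_SUFFIXES:
                grouped[person_dir.name].append(image_path)
    return dict(grouped)
```

Printing the number of images per person from this mapping is a quick way to spot someone with too few training photos before encoding.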

Setting Up the Environment

Before running the application, ensure that you have the necessary dependencies installed. The primary libraries to install are:

pip install face_recognition opencv-python scikit-learn matplotlib seaborn

Make sure you also have a Python version newer than 3.6 for compatibility with these libraries. I used Python 3.12.7. The other modules used (os, sys, pdb, pickle, argparse, threading, queue and collections) are part of the Python standard library, and numpy is installed as a dependency of the packages above. These are the imports. I will explain the use of each as we go through the code together.

import os
import sys
import face_recognition
import pdb
import pickle
import cv2
from collections import Counter
import argparse
import threading
import queue
import numpy as np
import matplotlib.pyplot as plt
import seaborn as sns
from sklearn.metrics import confusion_matrix, ConfusionMatrixDisplay, accuracy_score, recall_score

Before training the model, some setup is required. We need folders for the following: encodings, training, validation and unknown_frames. We also use constants for the rectangle color, the text color, the face detection model to use and the name of the encodings file. We then check whether these folders exist and create any that are missing. Finally, we create a Queue to hold the frames that need saving. A queue is used because a separate thread saves the images of unknown faces. Saving an image is an I/O operation, and waiting for it to finish inside the main loop would cause lag in the application. Handing this work to a thread suits a small application like this one. The code is shown below:

BASE_DIR = os.path.abspath(os.path.dirname(__file__))
ENCODING_FOLDER = os.path.join(BASE_DIR, "encodings")
TRAINING_FOLDER = os.path.join(BASE_DIR, "training")
VALIDATION_FOLDER = os.path.join(BASE_DIR, "validation")
UNKNOWN_FRAMES_FOLDER = os.path.join(BASE_DIR, "unknown_frames")

RECTANGLE_COLOR = "green"
TEXT_COLOR = "white"
MODEL_CHOICE = "hog"
ENCODED_FILE = "encodings.pkl"

# Create each folder if it does not exist yet
for folder in (ENCODING_FOLDER, TRAINING_FOLDER, VALIDATION_FOLDER, UNKNOWN_FRAMES_FOLDER):
    os.makedirs(folder, exist_ok=True)

# Thread-safe queue to hold frames for saving
frame_queue = queue.Queue()
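To make the queue-plus-thread idea concrete, here is a minimal sketch of the same producer/consumer pattern without any OpenCV involved. The names worker and run_demo are hypothetical, and appending a string stands in for writing an image to disk. A None value is used as the signal that no more work is coming.

```python
import queue
import threading


def worker(jobs, results):
    """Consume items until the None sentinel arrives."""
    while True:
        item = jobs.get()
        if item is None:  # sentinel: the producer has no more work
            break
        results.append(f"saved {item}")  # stand-in for a slow disk write


def run_demo(items):
    jobs = queue.Queue()
    results = []
    thread = threading.Thread(target=worker, args=(jobs, results))
    thread.start()
    for item in items:
        jobs.put(item)  # returns immediately, so the producer is not blocked
    jobs.put(None)      # tell the worker to stop
    thread.join()       # wait until everything queued has been handled
    return results
```

Calling run_demo(["frame1", "frame2"]) hands both items to the worker and only returns once the worker has finished with them, which is the behaviour we want when shutting the application down.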

The Entry Point for the Application

Below is the entry point for the program. The argparse library parses the command-line arguments. The program accepts three options:

1. python3 main.py --train for training images using the training folder.

2. python3 main.py --validate for validating the model using the validation folder.

3. python3 main.py --run for running the application using the default webcam.

For the remaining sections, we will go through the functionality associated with each argument.

if __name__ == "__main__":
    parser = argparse.ArgumentParser(description="App to recognize faces in webcam")
    parser.add_argument("--train", action="store_true", help="Train using the images in training folder")
    parser.add_argument("--validate", action="store_true", help="Validate the model using images in the validation folder")
    parser.add_argument("--run", action="store_true", help="Run the application using default webcam")

    # Parse all incoming arguments
    args = parser.parse_args()

    # At least one of the three flags is required
    if not (args.train or args.validate or args.run):
        print("No arguments were given. Use python main.py --help")
        sys.exit(1)

    if args.train:
        print("Start training model...")
        encode_faces()
        print("Finished training model!")

    if args.validate:
        print("Validating Model..")
        validate_model()
        print("Finished Validating Model")

    if args.run:
        run_app()
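Because each flag uses action="store_true", the flags can be combined in a single call (for example --train --validate), and they are handled in the fixed order train, validate, run. The sketch below illustrates this by passing an explicit argument list to parse_args instead of reading the real command line. The helper names build_parser and selected_modes are assumptions made for this example only.

```python
import argparse


def build_parser():
    """Build a parser with the same three boolean flags as main.py."""
    parser = argparse.ArgumentParser(description="App to recognize faces in webcam")
    for flag, help_text in (
        ("--train", "Train using the images in training folder"),
        ("--validate", "Validate the model using images in the validation folder"),
        ("--run", "Run the application using default webcam"),
    ):
        parser.add_argument(flag, action="store_true", help=help_text)
    return parser


def selected_modes(argv):
    """Return the chosen modes in the order the program would execute them."""
    args = build_parser().parse_args(argv)
    return [name for name in ("train", "validate", "run") if getattr(args, name)]
```

An empty result from selected_modes([]) corresponds to the "No arguments were given" branch in the entry point.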

Training the Model

The first step is to train the model using images stored in a designated training folder. The function encode_faces below loads each image, extracts face locations, and encodes the faces into numerical vectors that the model can use for recognition. Its inputs are the constants that were defined above. We start by declaring two lists, one for the names and one for the encodings.

I then used os.walk to traverse the training folder. However, since we only want to process the subdirectories, we skip the root directory of the training folder using if dirpath == os.path.join(BASE_DIR, TRAINING_FOLDER): continue. Once in each sub-directory, we get the path to the image and also keep track of the name of the parent folder where the image is located. This is why each image folder is named after the person whose images it stores. We need the name to construct a dictionary that associates names with encodings.

We then use face_recognition.load_image_file to load the image into memory. This is followed by face_recognition.face_locations, which returns a list of bounding boxes (the regions of the image where faces are located).

The function face_recognition.face_encodings then takes the image and the bounding boxes and creates the encodings. We loop through these encodings, appending to the names and encodings lists. These two lists can now be used to create a dictionary associating the names with the encodings. The pickle library is then used to save this dictionary as encodings.pkl.

Code Breakdown:

def encode_faces(model=MODEL_CHOICE, encode_file_name=ENCODED_FILE):
    names = []
    encodings = []

    for dirpath, dirnames, filenames in os.walk(TRAINING_FOLDER):
        # Skip the root of the training folder; only the per-person sub-folders hold images
        if dirpath == os.path.join(BASE_DIR, TRAINING_FOLDER):
            continue

        for filename in filenames:
            image_path = os.path.join(dirpath, filename)
            # The folder name is the person's name
            parent_dir_name = os.path.basename(dirpath)

            # Load the image and detect faces
            image = face_recognition.load_image_file(image_path)
            face_bounded_boxes = face_recognition.face_locations(image, model=model)
            encoded_faces = face_recognition.face_encodings(image, face_bounded_boxes)

            # Store one (name, encoding) pair per detected face
            for encoding in encoded_faces:
                names.append(parent_dir_name)
                encodings.append(encoding)

    encodings_dict = {"names": names, "encodings": encodings}

    with open(os.path.join(ENCODING_FOLDER, encode_file_name), "wb") as file:
        pickle.dump(encodings_dict, file)
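After training, it can be useful to check what actually ended up in the saved file. The following is a small sketch that loads a file with the same {"names": ..., "encodings": ...} layout and counts the encodings per person. The function name encodings_per_person is an assumption for this example. Keep in mind that pickle files should only be loaded when they come from a source you trust.

```python
import pickle
from collections import Counter


def encodings_per_person(encodings_path):
    """Load the saved dictionary and count how many encodings each name has."""
    with open(encodings_path, "rb") as file:
        data = pickle.load(file)

    # The two lists are parallel: names[i] belongs to encodings[i]
    if len(data["names"]) != len(data["encodings"]):
        raise ValueError("names and encodings must have the same length")

    return Counter(data["names"])
```

A person with zero or very few encodings usually means that no face was detected in some of their training images.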

Validating the Model

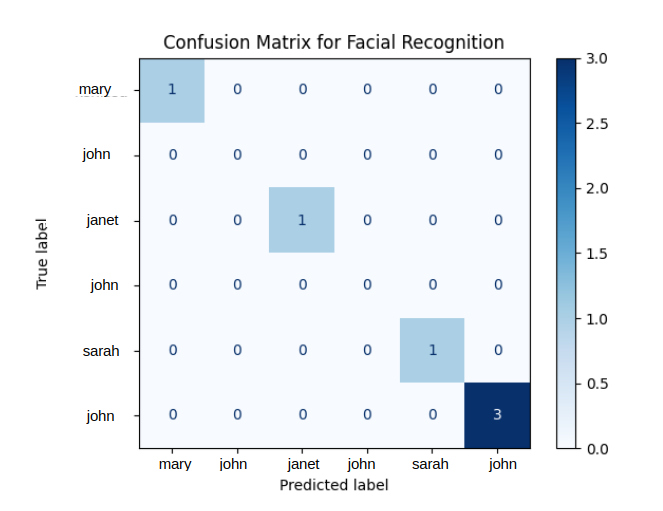

Once the model is trained, it’s important to validate its performance using a set of validation images. This step checks that the model is capable of identifying faces accurately. Let us break down the function validate_model(), which is used to validate our model. We begin by loading the saved encodings for our model. We then create two lists, y_true and y_pred, to store the correct names of the validation images and the names predicted by the model based on the encodings. The next step is to loop through the files in our validation folder, load the images and get the face locations and encodings using face_recognition.face_locations and face_recognition.face_encodings respectively.

The _match_face function is a private function that takes these encodings and checks whether they match any of the saved encodings. If a match is found, the name is returned. If no match is found, the string "Unknown" is returned. The _match_face() function is shown below validate_model(). The line filename = filename.split('_')[0] grabs the person's name, since the validation folder can contain multiple images of the same person, stored in the form john_1 and john_2, for example. We then append the actual name to y_true and the predicted name to y_pred.

The next step is to calculate the accuracy and recall. Our model is performing perfectly (Accuracy: 1 and Recall: 1), but remember that the sample size is small. Finally, we display a confusion matrix to visualize the performance on the validation set. The diagonal elements are the true positives, and again they show a perfectly performing model. There are some zeros on the diagonal, but these belong to the repeated entries for the same individual, John. The list y_true is passed as the labels of the matrix, and it contains John 3 times, which is why his row and column are replicated. This will be addressed in the future.

Code Breakdown:

def validate_model():
    with open(os.path.join(ENCODING_FOLDER, ENCODED_FILE), "rb") as file:
        registered_face_encodings = pickle.load(file)

    y_true = []
    y_pred = []

    for filename in os.listdir(VALIDATION_FOLDER):
        input_image_file = face_recognition.load_image_file(os.path.join(VALIDATION_FOLDER, filename))
        input_face_locations = face_recognition.face_locations(input_image_file, model=MODEL_CHOICE)
        input_face_encodings = face_recognition.face_encodings(input_image_file, input_face_locations)
        face_names = _match_face(input_face_encodings, registered_face_encodings)

        # File names look like john_1.jpg, so the part before "_" is the true name
        filename = filename.split("_")[0]
        y_true.append(filename)
        # Use the first detected face; fall back to "Unknown" if no face was found
        y_pred.append(face_names[0] if face_names else "Unknown")

    accuracy = accuracy_score(y_true, y_pred)
    print(f'Accuracy : {accuracy: .2f}')

    recall = recall_score(y_true, y_pred, average='micro')
    print(f'Recall: {recall: .2f}')

    # Note: y_true contains repeated names, so the matrix gets duplicate rows and columns
    cm = confusion_matrix(y_true, y_pred, labels=y_true)
    disp = ConfusionMatrixDisplay(confusion_matrix=cm, display_labels=y_true)
    disp.plot(cmap=plt.cm.Blues)
    plt.title('Confusion Matrix for Facial Recognition')
    plt.show()
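To show what the accuracy figure and the confusion matrix are counting, here is a small standard-library sketch that does not use scikit-learn. It builds the label list from the unique names, so each person appears exactly once. The function names accuracy and confusion_counts are assumptions for this example and are not part of main.py.

```python
from collections import Counter


def accuracy(y_true, y_pred):
    """Fraction of validation images whose predicted name equals the true name."""
    if not y_true:
        raise ValueError("y_true must not be empty")
    correct = sum(truth == guess for truth, guess in zip(y_true, y_pred))
    return correct / len(y_true)


def confusion_counts(y_true, y_pred):
    """Return (labels, matrix) where matrix[i][j] counts true labels[i] predicted as labels[j]."""
    labels = sorted(set(y_true) | set(y_pred))  # each name appears only once
    pairs = Counter(zip(y_true, y_pred))
    matrix = [[pairs[(truth, guess)] for guess in labels] for truth in labels]
    return labels, matrix
```

With the six validation images from this project and perfect predictions, the row for john would hold a single 3 on the diagonal instead of being spread over three separate rows.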

The _match_face function

def _match_face(video_face_encodings, registered_face_encodings, frame=None):
    face_names = []

    for face_encodings in video_face_encodings:
        # True/False for each registered encoding, depending on whether it is close enough
        boolean_matches = face_recognition.compare_faces(
            registered_face_encodings["encodings"], face_encodings
        )
        name = "Unknown"

        # Pick the registered encoding with the smallest distance to this face
        face_distances = face_recognition.face_distance(registered_face_encodings["encodings"], face_encodings)
        top_match_index = np.argmin(face_distances)

        if boolean_matches[top_match_index]:
            name = registered_face_encodings["names"][top_match_index]

        # If the face is unknown, put the frame in the queue to be saved.
        # During validation no frame is passed, so nothing is queued.
        if name == "Unknown" and frame is not None:
            print("Unknown face detected sending to queue to be processed")
            frame_queue.put(frame)

        face_names.append(name)

    return face_names
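The matching rule can be illustrated without the library: find the registered encoding nearest to the new face, and accept it only if the distance is within a tolerance. The sketch below uses plain Euclidean distance from the standard library and short toy vectors in place of the 128-number encodings. The function name closest_name is hypothetical, and the default tolerance of 0.6 mirrors the default used by face_recognition.compare_faces.

```python
import math


def closest_name(known_names, known_encodings, candidate, tolerance=0.6):
    """Return the name of the nearest known encoding, or "Unknown" if it is too far away."""
    if not known_encodings:
        return "Unknown"

    # Euclidean distance from the candidate to every known encoding
    distances = [math.dist(known, candidate) for known in known_encodings]

    # Index of the smallest distance (the role np.argmin plays in _match_face)
    best = min(range(len(distances)), key=distances.__getitem__)

    return known_names[best] if distances[best] <= tolerance else "Unknown"
```

Lowering the tolerance makes matching stricter, which reduces false matches between family members who look alike at the cost of more faces being reported as unknown.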

Running the Real-Time Application

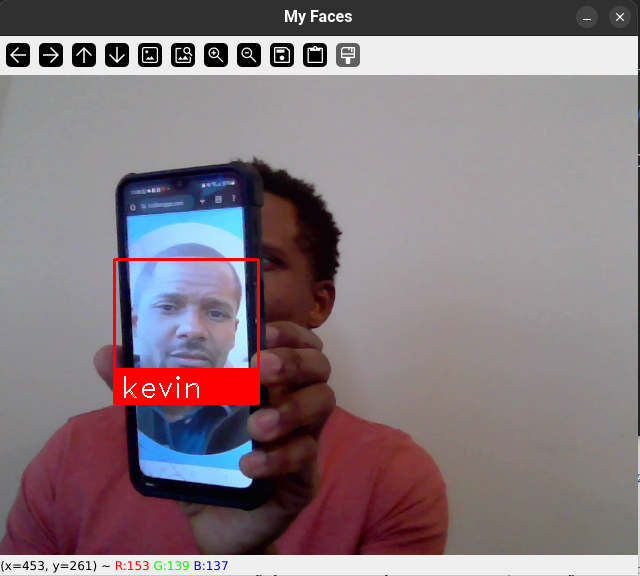

After training and validating the model, the application can now be used for real-time face recognition. It captures frames from the webcam, detects faces, and compares them against the trained encodings. If the face is recognized, the corresponding name is displayed. If the face is not recognized, the frame is stored in a folder named unknown_frames.

The run_app function has quite a bit of code, and it will help if you have used OpenCV before. We start by defining and starting the thread that runs the _save_frame function. Its purpose, as the name suggests, is to save a frame. It checks whether an unknown image is in the queue, and if there is one, saves it as unknown.jpg. (A better option would be to include a timestamp in the filename to prevent overwriting. For this simple example we give the image a single name. I will update it in the future.) The next step is to load the encodings as we have done before. We then define a variable handle_current_frame that controls how often we process a frame (we skip every other frame). This reduces the load, as processing every frame takes quite a bit of resources, and consecutive frames usually differ only slightly. The line cap = cv2.VideoCapture(0) opens the default camera on your computer or the default camera connected to the system. A while loop is then used to continuously read frames with ret, frame = cap.read().

We then check handle_current_frame and use resize from cv2 to shrink the frame to 25% of its original width and height. This also helps speed up the recognition process. The next step is to use cv2.cvtColor to convert from OpenCV's BGR color format to RGB, as this is required by the facial recognition library. The next two lines are ones we have seen before: they detect the locations (bounding boxes) and then generate the encodings. Once the encodings are generated, we can use _match_face again to match the encodings from the video to the registered encodings. A new private function, _draw_face, is introduced here. It draws a box around the face with the name of the user or the string 'Unknown'. The line handle_current_frame = not handle_current_frame toggles handle_current_frame so that we skip every other frame.

The function cv2.imshow('My Faces', frame) is then used to display the current frame in a window with the title My Faces. The function cv2.waitKey(1) waits 1 ms for a key press, and if the key 'q' is pressed we exit the loop and stop the webcam feed. We use frame_queue.put(None) to signal the saving thread to stop (there is a check in _save_frame() that breaks out of its loop when it receives None). Calling thread.join() makes the program wait for _save_frame() to finish processing before exiting. The last two lines are responsible for releasing the webcam and closing the OpenCV window.

Code Breakdown:

def run_app():
    # Start the frame-saving thread
    thread = threading.Thread(target=_save_frame)
    thread.start()

    with open(os.path.join(ENCODING_FOLDER, ENCODED_FILE), "rb") as file:
        registered_face_encodings = pickle.load(file)

    # Variable to control how often to process (every other frame)
    handle_current_frame = True

    # Reference to webcam
    cap = cv2.VideoCapture(0)

    while True:
        # Get a single frame of the video
        ret, frame = cap.read()

        # Stop if the camera did not return a frame
        if not ret:
            break

        if handle_current_frame:
            # Resize the frame to a quarter of its width and height. This speeds up processing
            small_frame = cv2.resize(frame, (0, 0), fx=0.25, fy=0.25)

            # Convert the image from OpenCV BGR format to face_recognition RGB format
            rgb_frame = cv2.cvtColor(small_frame, cv2.COLOR_BGR2RGB)

            # Find video face locations and encodings for the current frame
            video_face_locations = face_recognition.face_locations(rgb_frame, model=MODEL_CHOICE)
            video_face_encodings = face_recognition.face_encodings(rgb_frame, video_face_locations)

            face_names = _match_face(video_face_encodings, registered_face_encodings, frame)
            _draw_face(video_face_locations, face_names, frame)

        # Switch handle_current_frame so that the next frame is skipped (or processed)
        handle_current_frame = not handle_current_frame

        # Show image
        cv2.imshow('My Faces', frame)

        # When 'q' is pressed the application will break out of the while loop
        if cv2.waitKey(1) == ord('q'):
            break

    # Signal the saving thread to finish
    frame_queue.put(None)

    # Wait for the saving thread to finish
    thread.join()

    cap.release()
    cv2.destroyAllWindows()

Function _save_frame

def _save_frame():
    while True:
        unknown_frame = frame_queue.get()

        # The frame is None at the end of the program, as run_app() sets this manually
        if unknown_frame is None:
            break

        # TODO: use timestamps to save image
        cv2.imwrite(os.path.join(UNKNOWN_FRAMES_FOLDER, 'unknown.jpg'), unknown_frame)
        frame_queue.task_done()
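One possible way to address the TODO above is to build the file name from the current time so that each unknown frame gets its own file. This is only a sketch: the function name unknown_frame_path and the unknown_<timestamp>.jpg naming scheme are assumptions, not what main.py currently does.

```python
import os
from datetime import datetime


def unknown_frame_path(folder, now=None):
    """Build a file path such as unknown_20241114_021405_123456.jpg inside folder."""
    # Allow the caller to pass a fixed time (handy for testing); default to the current time
    now = now or datetime.now()
    # Microseconds (%f) keep names distinct when several frames arrive within one second
    stamp = now.strftime("%Y%m%d_%H%M%S_%f")
    return os.path.join(folder, f"unknown_{stamp}.jpg")
```

The result could then be passed to cv2.imwrite in place of the fixed 'unknown.jpg' path. Note that the folder would grow quickly while an unknown face stays in view, so some form of rate limiting may also be worth considering.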

Function _draw_face

def _draw_face(face_locations, face_names, frame):
    # Each entry in face_locations is a (top, right, bottom, left) tuple
    for locations, name in zip(face_locations, face_names):
        # Remember we resized the frame for faster processing, so we scale the locations back up before drawing
        top, right, bottom, left = map(lambda x: x * 4, locations)

        # Draw a box around the face ((0, 0, 255) is red in OpenCV's BGR order)
        cv2.rectangle(frame, (left, top), (right, bottom), (0, 0, 255), 2)

        # Draw a label with the name
        cv2.rectangle(frame, (left, bottom - 35), (right, bottom), (0, 0, 255), cv2.FILLED)
        font = cv2.FONT_HERSHEY_DUPLEX
        cv2.putText(frame, name, (left + 6, bottom - 6), font, 1, (255, 255, 255), 1)
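The multiplication by 4 above is tied to the 0.25 resize factor used in run_app: a face found on the small frame has to be mapped back onto the full-size frame before drawing. As a small illustration of that relationship (the helper name scale_box is an assumption for this example), the scale-back factor can be derived from the resize factor instead of being hard-coded:

```python
def scale_box(location, scale=0.25):
    """Map a (top, right, bottom, left) box from the resized frame back to the original frame."""
    factor = 1 / scale  # a frame shrunk to 0.25 needs its coordinates multiplied by 4
    top, right, bottom, left = location
    return tuple(int(round(value * factor)) for value in (top, right, bottom, left))
```

Keeping the two numbers linked this way avoids misplaced boxes if the resize factor is changed later.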

Screenshot of Running Application

I ran quite a number of tests, including with pictures taken at younger ages, and the model was accurate almost 99.9% of the time.

Conclusion

In this blog, we've walked through the process of creating a face recognition system that can train, validate, and run in real-time.

This example also demonstrates how facial recognition can be integrated into various use cases, such as security systems, authentication systems, or even personal projects. The key takeaway is that with just a few libraries and a bit of Python code, you can build an effective facial recognition system. The code still needs some improvements, as mentioned in the blog, but this is a good start for a facial recognition security system. In the future, I will look at saving unknown frames without overwriting, increasing the dataset and performing more tests.